What is Sleep Mode?

Signals generally come in two categories:

1) A constant voltage, such as the output from a slider control, which doesn’t vary unless we move the slider. While the slider isn’t moving, the slider module sleeps. As soon as the slider moves, the module “wakes”, processes and sends the change in value, then promptly goes back to sleep once the value stops changing.

2) A constantly varying voltage, such as the output from an oscillator.

Most modules detect whether an incoming voltage is constant or changing. While the input is changing, the module processes the signal. As soon as the signal stops changing or falls to zero, the module shuts down audio processing (“sleeps”) to save CPU.
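To make the idea concrete, here is a minimal Python sketch of how a module might decide that its input is static. It is only an illustration under stated assumptions, not SynthEdit’s actual code. It assumes the module inspects one block of samples at a time and treats the input as static when every sample equals the first one. The names `is_static` and `BLOCK_SIZE` are hypothetical.

```python
from typing import Sequence

BLOCK_SIZE = 8  # hypothetical block length, for illustration only


def is_static(block: Sequence[float]) -> bool:
    """Return True if every sample in the block equals the first one.

    A constant block (including all zeros) gives the module nothing new
    to compute, so in this model it could stop processing and sleep.
    """
    return all(sample == block[0] for sample in block)


slider_block = [0.5] * BLOCK_SIZE  # slider not moving: constant voltage
silence_block = [0.0] * BLOCK_SIZE  # input has dropped to zero
osc_block = [0.0, 0.7, 1.0, 0.7, 0.0, -0.7, -1.0, -0.7]  # oscillator: always changing

for name, block in [("slider", slider_block), ("silence", silence_block), ("oscillator", osc_block)]:
    state = "sleep" if is_static(block) else "awake"
    print(f"{name}: {state}")
```

A real module would also have to handle the moment its input changes from static to moving. That wake-up is where the CPU spike described below comes from.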

Modules in sleep mode will usually send a status signal telling the next module(s) in the processing chain that they can also shut down. Soon all of the modules in the chain enter sleep mode.
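The following sketch models this chain reaction. Again, it is a hypothetical illustration, not the SynthEdit SDK. The `Module` class, its `connect` and `receive_status` methods, and the module names are invented here. The model also ignores filter and delay tails by assuming that a static input immediately produces a static output.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Module:
    """Hypothetical processing module (illustration only, not the SynthEdit SDK)."""

    name: str
    asleep: bool = False
    downstream: List["Module"] = field(default_factory=list)

    def connect(self, other: "Module") -> "Module":
        # Wire this module's output to another module's input and return
        # `other` so connections can be chained.
        self.downstream.append(other)
        return other

    def receive_status(self, input_is_static: bool) -> None:
        # Simplifying assumption: a static input gives a static output, so
        # the module sleeps and passes the same status downstream.
        # A changing input wakes it, and the wake-up propagates the same way.
        self.asleep = input_is_static
        for module in self.downstream:
            module.receive_status(input_is_static)


source = Module("Source")
filt = Module("Filter")
amp = Module("Amp")
source.connect(filt).connect(amp)

source.receive_status(input_is_static=True)  # the whole chain goes to sleep
print([(m.name, m.asleep) for m in (source, filt, amp)])

source.receive_status(input_is_static=False)  # the whole chain wakes up
print([(m.name, m.asleep) for m in (source, filt, amp)])
```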

There is a catch, however: the CPU load may briefly spike when a static signal changes and the sleeping modules wake up. This can cause an audio click or crackle if the system happens to be running at peak CPU load.

If a signal isn’t audio and doesn’t need audio processing, always use GUI modules instead of DSP modules to avoid the possibility of these glitches. Whenever suitable GUI modules are available, calculate control signals on the GUI side.

Sleep mode in action.

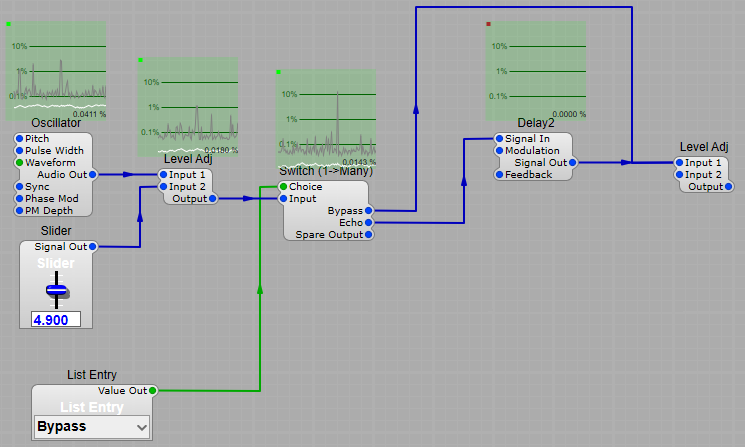

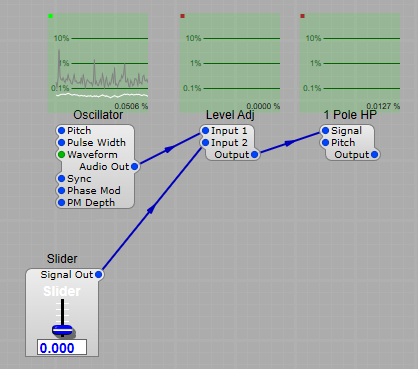

To see sleep mode in action, set up the following structure.

Once it’s set up, right-click on each module and select “Debug…” from the “more” option. A dark green window pops up for each module, showing its CPU activity level. The small green dot in the top left indicates that the module is “awake”, a dark red dot shows that it is in “sleep” mode, and the graph line shows CPU activity.

With the Level Adj slider set at 0 (hence a gain of 0 for the Level Adj), we can see that although the oscillator is active and using CPU, the Level Adj and the 1 Pole HP filter are in sleep mode.

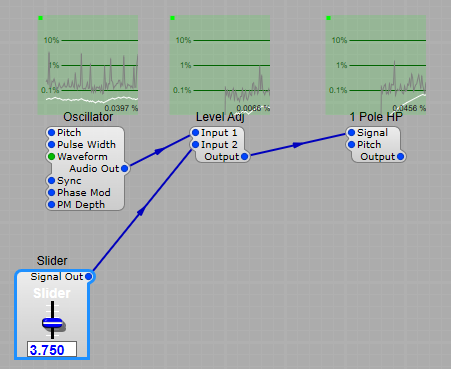

Now raise the slider slightly…

You’ll see both modules “wake” almost immediately. As soon as the Level Adj wakes up, it tells the following modules “hey, there’s activity, get ready to do some work”. The dots turn green and the modules start using CPU.
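The sketch below models why the 1 Pole HP filter can sleep while the Level Adj gain is 0. Multiplying a changing oscillator signal by zero produces constant silence, which is a static signal. The functions `oscillator_block`, `level_adj` and `is_static` are hypothetical stand-ins for the real modules. The static test simply assumes that “static” means every sample in the block is equal.

```python
import math
from typing import List


def is_static(block: List[float]) -> bool:
    # Static = every sample equal (a constant, including silence).
    return all(s == block[0] for s in block)


def oscillator_block(n: int = 16, cycles: float = 2.0) -> List[float]:
    # One block of a sine wave: always changing, so the oscillator stays awake.
    return [math.sin(2 * math.pi * cycles * i / n) for i in range(n)]


def level_adj(block: List[float], gain: float) -> List[float]:
    # Hypothetical gain stage standing in for the Level Adj module.
    return [gain * s for s in block]


osc = oscillator_block()
for gain in (0.0, 0.1):  # slider at 0, then raised slightly
    out = level_adj(osc, gain)
    hp_state = "sleep" if is_static(out) else "awake"
    print(f"gain={gain}: downstream 1 Pole HP is {hp_state}")
```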

All stock SynthEdit modules support sleep mode, but because this function is not compulsory in SynthEdit (SE), some third-party modules may not (although most developers do include it). If your plug-in project is clicking, glitching or stuttering and you suspect a module is using more than its fair share of CPU, Debug will help you track down either the module in question or a point where, for some reason, your “wiring” is preventing sleep mode from working.

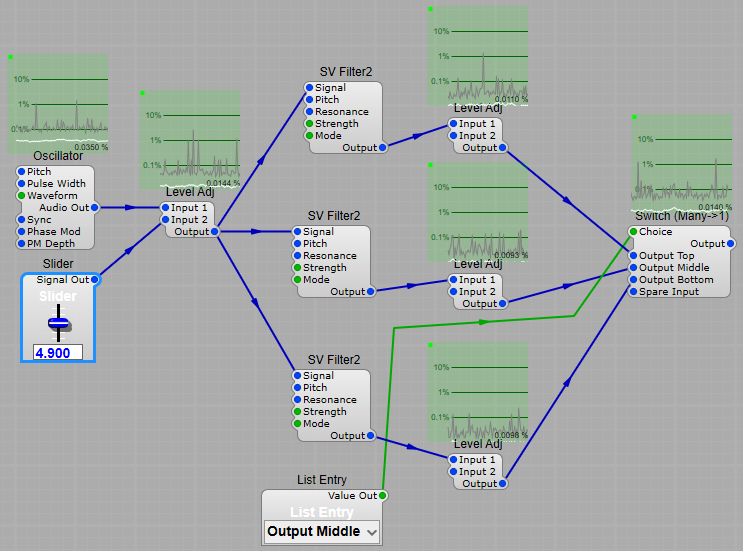

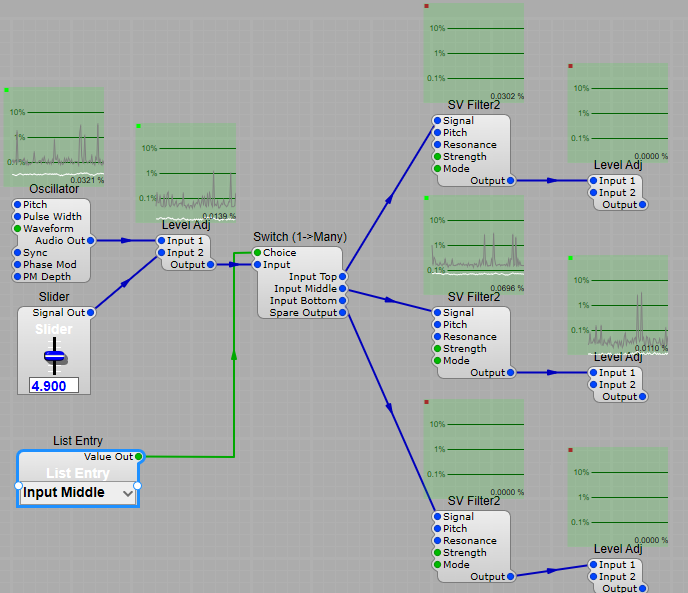

Why is this important? Consider the following structure, where we have three SV filters and switch between them at the output. All the modules are awake as soon as the first one wakes, even though we only hear one filter at a time.

Now let’s see what happens if we put the switch before the filters. Here we have selected the middle filter, and you can see that the only “awake” chain is the one after the middle filter. Because the top and bottom filters receive no audio signal, they are still “sleeping”. We save CPU by keeping only the audio processing chain we need “awake” and leaving the others to “sleep on”. Try this out and watch each chain go to sleep and wake as you switch between filters. Switching the signal at the input of each filter, rather than at the outputs, can have a big influence on how much CPU your plug-in uses.
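A rough way to see the difference is to count awake filters in each layout. In this hypothetical model, the `awake_filters` function is invented for illustration. It assumes that a filter fed audio stays awake, that a filter fed silence sleeps, and that sleeping filters cost essentially no CPU.

```python
from typing import Dict


def awake_filters(num_filters: int, selected: int, switch_at_input: bool) -> Dict[int, bool]:
    """Return which filter chains are awake (hypothetical model).

    Output switch: every filter is fed the same audio, so all stay awake.
    Input switch: only the selected filter receives audio; the rest get
    silence and can sleep.
    """
    return {i: (not switch_at_input) or i == selected for i in range(num_filters)}


for switch_at_input in (False, True):
    states = awake_filters(num_filters=3, selected=1, switch_at_input=switch_at_input)
    where = "input" if switch_at_input else "output"
    print(f"switch at {where}: {sum(states.values())} of 3 filters awake")
```

With more filters, or more expensive ones, the saving from the input-switch layout grows in proportion.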

Effects need sleep too!

When adding a bypass mode to an effect such as an echo, always consider sleep mode too. Put the switch on the input, or on both the input and the output, but never on the output alone: an output-only switch keeps feeding audio to the effect, so it never gets a chance to sleep. The structure below is the best way to add a bypass switch.

Note: Delay-based modules of any kind will not go into sleep mode immediately. Why? Because they can’t stop processing audio until the last of the echo or reverb tail has decayed to a level so low as to be unimportant. Only then will the module sleep. Otherwise the effect would cut off abruptly whenever a module before the Delay went into sleep mode! Nor can we wait for total silence: in theory the tail might never reach zero, or would take a very long time to do so at high feedback levels.
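The arithmetic behind that last point is easy to sketch. Assume a simple feedback delay in which each repeat is the previous one multiplied by the feedback amount g (0 < g < 1). The n-th repeat then has amplitude g^n, which never reaches exactly zero. The tail only falls to a threshold after about n = ⌈ln(threshold) / ln(g)⌉ repeats. The function name, the -96 dB threshold and the 0.5 s delay time below are illustrative assumptions, not values SynthEdit is known to use.

```python
import math


def repeats_until_quiet(feedback: float, threshold_db: float = -96.0) -> int:
    """Smallest number of repeats n with feedback**n <= threshold.

    Hypothetical model: the first echo has amplitude 1.0 and each repeat is
    the previous one multiplied by `feedback`. Assumes threshold_db < 0.
    """
    if not 0.0 < feedback < 1.0:
        raise ValueError("feedback must be between 0 and 1 (exclusive)")
    threshold = 10 ** (threshold_db / 20)  # dB -> linear amplitude
    n = math.ceil(math.log(threshold) / math.log(feedback))
    # Guard against floating-point rounding right at the boundary.
    while feedback ** n > threshold:
        n += 1
    while n > 0 and feedback ** (n - 1) <= threshold:
        n -= 1
    return n


delay_seconds = 0.5  # hypothetical delay time
for feedback in (0.5, 0.9, 0.99):
    n = repeats_until_quiet(feedback)
    print(f"feedback {feedback}: {n} repeats, about {n * delay_seconds:.0f} s before the delay can sleep")
```

The tail length grows sharply as feedback approaches 1, which is why a delay has to settle for “quiet enough” rather than true silence before it sleeps.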